How to Perform a Distributed Parametric Sweep in COMSOL Multiphysics®

A standard parametric sweep solves parameter values one at a time, which limits the speedup you can get on a cluster. To overcome this limitation, COMSOL Floating Network Licenses provide access to the Distribute parametric sweep checkbox and the Cluster Sweep node, which allow multiple parameter values to be solved simultaneously. This article guides you through setting up a distributed parametric sweep.

Conceptual Overview

Before exploring distributed parametric sweeps in COMSOL Multiphysics®, it helps to understand the Message Passing Interface (MPI) and its role. MPI is a standardized communication protocol for parallel programs. In COMSOL Multiphysics®, MPI is used to create multiple processes that communicate and exchange data. This enables COMSOL Multiphysics® to break down a large cluster job into smaller tasks that are executed simultaneously across multiple nodes of the cluster, with MPI handling the communication between them. For large parametric sweeps, this can significantly reduce the overall computation time.

Distributed parametric sweeps use MPI to solve multiple parameter values simultaneously. The number of values solved concurrently scales directly with the number of MPI processes started by COMSOL Multiphysics®. Without MPI, COMSOL Multiphysics® solves a parametric sweep one parameter value at a time. If COMSOL Multiphysics® is launched with 5 MPI processes, however, each process can handle one parameter value, so 5 values are solved at once.
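As a rough illustration of this scaling, the sketch below estimates how many rounds a sweep needs for a given number of MPI processes. It is an idealized model, not a COMSOL feature: it assumes every parameter value takes about the same time to solve and that each MPI process handles one value at a time. The function name sweep_rounds and the value count of 20 are made up for the example.

```shell
#!/bin/sh
# Idealized estimate of the number of rounds a sweep needs when each
# MPI process solves one parameter value at a time.
# sweep_rounds is a hypothetical helper; the numbers are illustrative.
sweep_rounds() {
    values="$1"   # number of parameter values in the sweep
    procs="$2"    # number of MPI processes (the -nn value)
    # Ceiling division: a partially filled last round still counts.
    echo $(( (values + procs - 1) / procs ))
}

sweep_rounds 20 1   # serial sweep: 20 rounds
sweep_rounds 20 5   # 5 MPI processes: 4 rounds
```

In practice the speedup is smaller than this ratio suggests, because the parameter values rarely take identical time and the processes share node resources.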

Detailed Overview

Step 1: Model Preparation

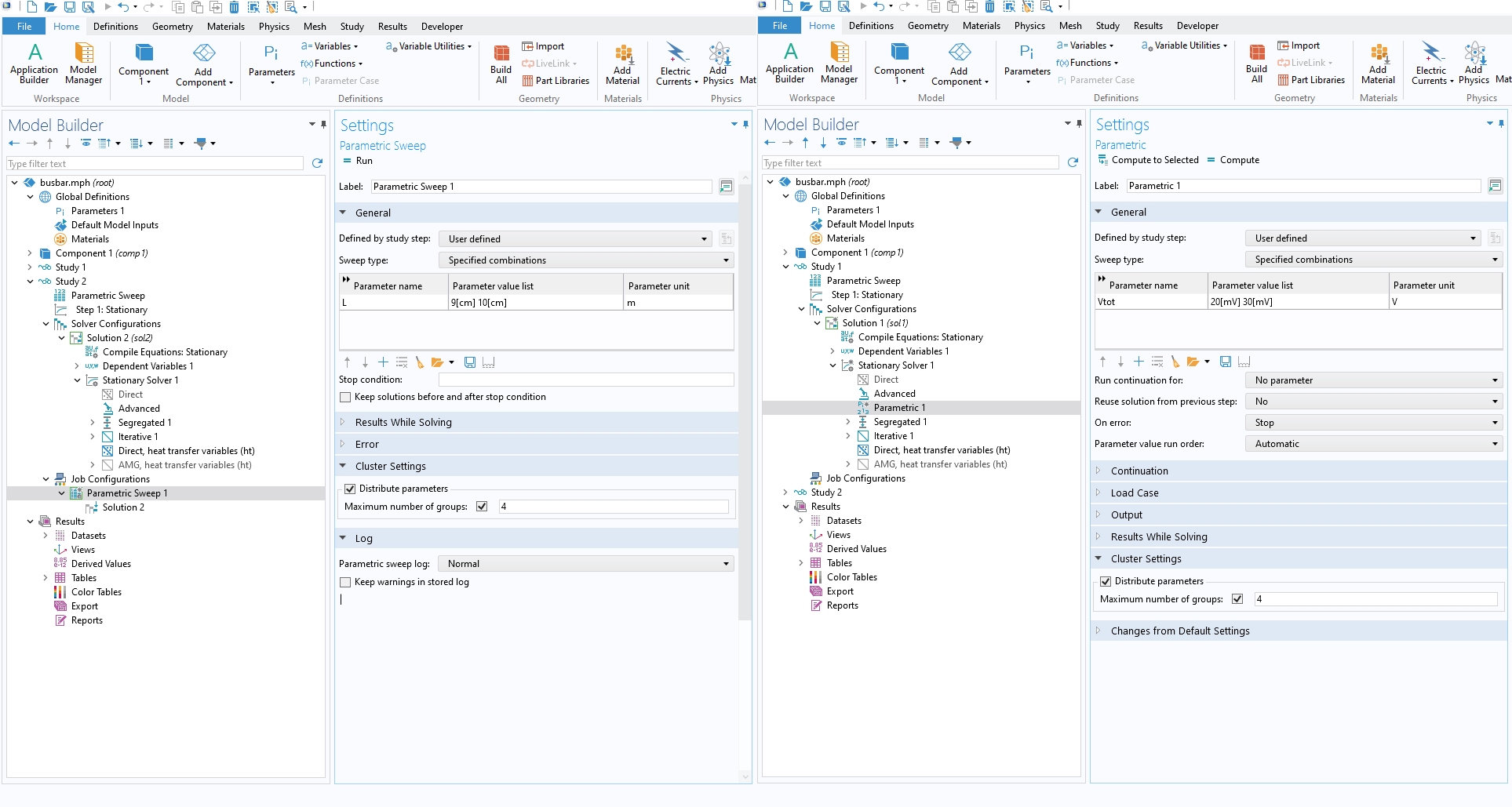

To prepare a model for a distributed parametric sweep, you'll have to incorporate a Parametric Sweep node into your model, much like you would for a standard parametric sweep. The only extra requirement is to check the Distribute parametric sweep checkbox located within the Advanced Settings section of the Parametric Sweep node. The Batch and Cluster option must be checked in the Show More Options window to make the Distribute parametric sweep checkbox available. You can open that window from the Model Builder toolbar.

Step 2: Run the COMSOL Multiphysics® Job

To run a COMSOL Multiphysics® job in distributed mode (with MPI) from the command line on Linux, use the following command:

comsol batch -nn 4 -nnhost 1 -f hostfile -np 8 -inputfile example.mph -outputfile example_solved.mph -batchlog logfile.log -createplots

This command starts a COMSOL Multiphysics® job without a GUI (batch), using 4 MPI processes (-nn 4), one MPI process per host in the cluster (-nnhost 1), and 8 cores per MPI process (-np 8). The file passed with -f hostfile lists the hostnames of the compute nodes on which COMSOL Multiphysics® can start MPI processes. COMSOL Multiphysics® solves the model example.mph, saves the results to example_solved.mph, and writes the solver log to logfile.log. The last option, -createplots, makes sure that default plots are created in the output model, example_solved.mph.
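For reference, a hostfile for the four-process command above might be created as follows. This is a minimal sketch that assumes the common one-hostname-per-line layout. The names node01 to node04 are placeholders, not real hosts, and must be replaced with the hostnames of your own compute nodes.

```shell
#!/bin/sh
# Write a hostfile for a four-node cluster into the current directory.
# node01..node04 are placeholder hostnames used only for illustration.
cat > hostfile <<'EOF'
node01
node02
node03
node04
EOF
```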

If you use a scheduler to manage jobs on your cluster, you may be able to omit the -nn, -nnhost, and -f flags and have COMSOL Multiphysics® read the parallel configuration directly from the scheduler. For more information, see the Running COMSOL® in Parallel on Clusters knowledge base article.

Optimize the Values of ‑nn, ‑nnhost, and ‑np

So far, we have covered what the -nn, -nnhost, and -np flags mean, but not how to choose values that give the best performance. The choice depends on two factors: the characteristics of your COMSOL Multiphysics® model and the computational resources available on the cluster where you plan to run it.

Choosing the Right ‑np Value

To begin, perform preliminary test runs using a single parameter value on a single node of the cluster. The goal is to find the smallest number of cores that gives the best performance, together with the amount of RAM that run consumes. A sample command looks like this:

comsol batch -np <number of cores> -inputfile example.mph -outputfile example_solved.mph -batchlog logfile.log

In these runs, systematically vary the number of cores used. For each run, be sure to record the solution time and RAM utilization from the log file.
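One way to organize these test runs is a small loop that generates one command per core count. The sketch below is a dry run that only prints the commands. The list of core counts and the per-run file names (example_np8.mph, logfile_np8.log, and so on) are assumptions chosen for illustration; the flags are the ones from the sample command above.

```shell
#!/bin/sh
# Dry run: print one single-node test command per core count.
# scan_cmd is a hypothetical helper; the core counts and the
# per-run output and log file names are illustrative choices.
scan_cmd() {
    np="$1"
    echo "comsol batch -np ${np} -inputfile example.mph -outputfile example_np${np}.mph -batchlog logfile_np${np}.log"
}

for cores in 2 4 8 16 32; do
    scan_cmd "$cores"
done
```

To execute the runs rather than print them, the output of the script can be piped to sh on a machine where the comsol command is available. Writing a separate log file per run keeps the solution time and RAM figures for each core count apart.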

By analyzing these test runs, you'll likely see a connection between the ideal core count and the number of degrees of freedom (DOF) in your model. As the number of unknowns (DOF) solved for a single parameter value increases, you'll generally find that using more cores leads to better performance. There's a limit to this, however: beyond a certain point, adding more cores no longer provides a speedup, because the communication overhead between cores starts to outweigh the benefits of parallelization. You can find more information in the Knowledge Base article COMSOL and Multithreading.

The key takeaway here is to identify the lowest core count that delivers the fastest solution time for your single parameter value. This is the optimal core count you should use when setting the -np flag for distributed parametric sweeps.

Choosing the Right ‑nnhost Value

The -nnhost flag sets the number of MPI processes per host. When each MPI process solves one parameter value, it therefore controls how many parameter values run concurrently on a single physical node of your cluster. To determine the optimal -nnhost value, compare the computational resources required by a single parameter value with the resources available on a single node.

For example, if a single parameter value requires 10 cores and 25 GB of RAM, and a single node in your cluster has 32 cores and 128 GB of RAM, you can set -nnhost to 3. Three concurrent parameter values use 30 of the 32 cores and 75 GB of the 128 GB of RAM, whereas a fourth would exceed the available cores. Here the core count, not the RAM, is the limiting resource.
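The same calculation can be written as a short script. This is a sketch of the arithmetic only: the helper name max_nnhost is hypothetical, and it assumes that cores and RAM are the only constraints on a node.

```shell
#!/bin/sh
# Largest number of concurrent parameter values one node can host,
# limited by whichever resource runs out first: cores or RAM.
# max_nnhost is a hypothetical helper, not a COMSOL command.
max_nnhost() {
    node_cores="$1";  node_ram_gb="$2"
    value_cores="$3"; value_ram_gb="$4"
    by_cores=$(( node_cores / value_cores ))    # integer (floor) division
    by_ram=$(( node_ram_gb / value_ram_gb ))
    if [ "$by_cores" -lt "$by_ram" ]; then
        echo "$by_cores"
    else
        echo "$by_ram"
    fi
}

# 32-core, 128 GB node; 10 cores and 25 GB per parameter value
max_nnhost 32 128 10 25   # prints 3
```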

Choosing the Right ‑nn Value

Lastly, the value of -nn depends on the number of parameter values you plan to handle simultaneously. Building upon the previous example, if you have three cluster nodes available for job execution and each node can efficiently handle three parameter values in parallel, you can set the -nn value to 9, indicating that nine parameter values will be solved concurrently.
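Putting the three values together for this example gives the following batch call, built here as a string and printed rather than executed. The sketch assumes the figures from the running example (3 nodes, 3 parameter values per node, 10 cores per value) and reuses the file names from Step 2.

```shell
#!/bin/sh
# Assemble the batch call for the running example.
# The node count, per-node count, and core count are the example figures.
nodes=3      # cluster nodes available for the job
nnhost=3     # MPI processes (parameter values) per node
np=10        # cores per MPI process, from the single-value test runs
nn=$(( nodes * nnhost ))   # total MPI processes

sweep_cmd="comsol batch -nn ${nn} -nnhost ${nnhost} -f hostfile -np ${np} -inputfile example.mph -outputfile example_solved.mph -batchlog logfile.log -createplots"
echo "$sweep_cmd"
```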

Distributing a Parameter Value

So far, we have discussed scenarios where the hardware resources available on a single node of a cluster are sufficient to solve a parameter value. However, there are exceptions:

- RAM limitations: A single parameter value might require more RAM than a single node offers.

- High core requirement: A very large problem, for example one with many millions of degrees of freedom, might need more cores than a single cluster node provides.

In these scenarios, start one MPI process per node of the cluster so that several MPI processes, running on different nodes, cooperate to solve a single parameter value. For instance, if a single parameter value requires about 200 GB of RAM but a single node in your cluster has only 128 GB of RAM, you'll need to pool RAM from 2 nodes to solve it. In that case, set -nnhost to 1 so that each node of the cluster runs one MPI process. Then set the Maximum number of groups option in the Cluster Settings section so that the total number of MPI processes divided by the maximum number of groups equals 2, because 2 nodes need to cooperate on each parameter value.

To elaborate further, suppose you have a cluster of 12 nodes available for a distributed parametric sweep, but a single parameter value requires 3 nodes to run. In this scenario, you would set the Maximum number of groups to 4, so that 4 parameter values are handled simultaneously, with each parameter value using 3 nodes to solve. The batch call should look like the following:

comsol batch -nn 12 -nnhost 1 -f hostfile -np 32 -inputfile example.mph -outputfile example_solved.mph -batchlog logfile.log -createplots
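The group arithmetic used in this section can be sketched as follows. Both helper names are hypothetical. The sketch assumes that RAM is the resource that forces a parameter value onto several nodes, that -nnhost is 1, and that the total number of MPI processes divides evenly by the number of nodes per value.

```shell
#!/bin/sh
# Hypothetical helpers for the arithmetic behind Maximum number of groups.

# Nodes that must cooperate on one parameter value (ceiling division).
nodes_per_value() {
    value_ram_gb="$1"; node_ram_gb="$2"
    echo $(( (value_ram_gb + node_ram_gb - 1) / node_ram_gb ))
}

# Value for Maximum number of groups: total MPI processes (-nn, with
# -nnhost 1) divided by the nodes that cooperate on each value.
max_groups() {
    nn="$1"; per_value="$2"
    echo $(( nn / per_value ))
}

nodes_per_value 200 128   # 200 GB per value, 128 GB per node: 2 nodes
max_groups 12 3           # 12 nodes, 3 nodes per value: 4 groups
```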

The Maximum number of groups setting is available under the Cluster Settings section of a Parametric node (located under the Solver Configurations node) or Parametric Sweep node (located under the Job Configurations node).

Note: Whether the Maximum number of groups setting appears under the Solver Configurations node or under the Job Configurations node depends on the model. The location is determined by an automatic dependency analysis that formulates the sweep. To check, right-click the Study node after adding a Parametric Sweep node and select Show Default Solver. This creates the solver configuration and, if needed, the job configurations required to run the study. You can then review the default solver nodes to see which of the two locations holds the setting in your model.

Distributed Mode: Cluster Computing vs. Batch Calls

The workflow discussed above used batch calls from the command line to launch the COMSOL Multiphysics® job in distributed mode. Alternatively, you can add and configure a Cluster Computing node directly within your study, alongside the Parametric Sweep node. This allows you to start cluster jobs from your local machine. The Cluster Computing node streamlines the process by automatically transferring files from your local machine to the HPC cluster, generating and executing the commands necessary to launch COMSOL Multiphysics® in distributed mode, and reporting the cluster job's progress in your local GUI. This functionality supports popular scheduler software such as SLURM, OGS/GE, LSF, PBS, and Microsoft® HPC Pack.

Further Learning

If you are interested in learning more about cluster or cloud computing with COMSOL Multiphysics®, our blog post How to Run on Clusters from the COMSOL Desktop® Environment provides a more in-depth guide to setting up a Cluster Computing node. We also have several other blog posts covering cluster and cloud computing.
